Music, Language and Writing (MuLaW): Functional relationships between music, language and writing

Team leader

Research topics

Our aim is to explore how music and writing contribute to language learning. To address this general question, we will combine sophisticated behavioral measures (auditory psychophysics, fine-grained kinematics) with different measures of brain activity and brain structure (ERPs, functional and structural MRI) in both children and adults, with and without learning disabilities (or movement disorders). A critical factor for efficient learning is the development of optimal training paradigms, which are at the core of all the proposed projects.

1. Music training and word learning

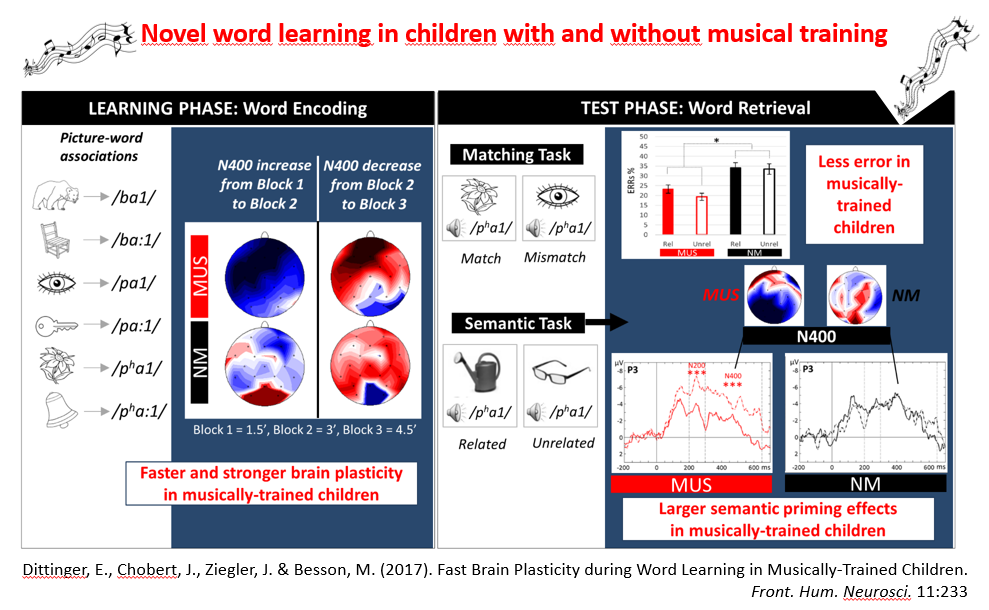

Thanks to the support of the ILCB (http://ilcb.fr/), we are currently conducting a series of experiments with professional musicians, musically trained children (8 to 12 years old) and control participants, using a novel word-learning paradigm that allows us to examine changes in brain activity during both a study phase and a test phase. This paradigm includes a syllabic categorization task, a word-learning study phase (through picture-word associations) and a test phase comprising a matching task (to determine whether participants have learned the associations) and a semantic task (to test whether word learning generalizes to semantic associates). The aim is to examine the influence of musical expertise on word learning. The first results are very promising: they show fast brain plasticity in both children and adults, as reflected by the emergence of an N400 after only three minutes of learning picture-word associations, as well as stable N400 effects in the matching and semantic tasks (Figure 1). Most importantly, these effects are larger and develop faster in musicians than in controls, and they are accompanied by higher word-learning performance. These findings strongly highlight the positive influence of music training on word learning, one of the most specifically human abilities.

2. Writing training and word learning

2.1. Learning how to write words: impact of digital tools in children, adolescents and adults.

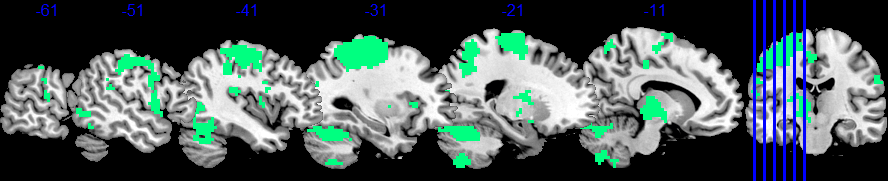

Writing, like music, is an acquired expertise, driven by cultural factors. Until now, we have focused on the neural basis of handwriting and reading in adults (see figure below), mostly at the level of single letters. We will now 1) study writing and reading at the scale of whole words, considering the orthographic, phonological and semantic levels; 2) compare “traditional” handwriting (pen) with writing using new digital tools (keyboards); and 3) adopt a developmental approach, testing adults, adolescents and children. This research has a strong societal impact for education and for literacy acquisition.

This general approach will be developed in two projects. The first aims to study the development of orthographic skills in 8- to 12-year-old children and the emergence of functional specificity in the neural networks for writing (funded by ANR: “ECRIRE”). The second will compare the effects of learning a new language based on a different writing system (Arabic) either with a pen or with a tablet keyboard (funded by e-Fran: “ARABESC”, in collaboration with ESPE researchers). Using a longitudinal approach with 120 children over their four years of middle school, we will test the impact of learning a new graphic system using behavioral, MRI and fMRI methods.

These projects will allow us to build a database of structural and diffusion MRI data in adults and children whose reading and writing habits and skills will be precisely quantified. We will therefore be able to assess possible structural brain changes driven by learning to write and by writing expertise.

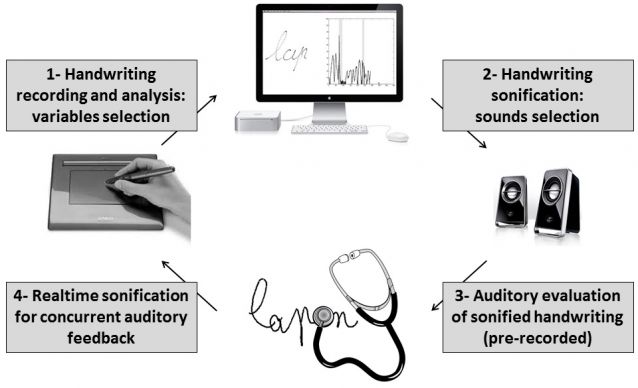

2.2. Multisensory integration in sonified handwriting

The aim is to understand how the brain integrates multimodal information (from vision, proprioception and audition) while learning handwriting. An interesting way of studying multisensory integration is the sensory weighting paradigm, which consists of modulating the level of congruence between auditory, visual and proprioceptive information at different learning stages. We will then investigate the neural substrate of multisensory integration using EEG recorded during handwriting production. M. Besson uses this method in our team, and J. Blouin's team in the laboratory uses EEG to study visuo-proprioceptive conflict (J. Danna is involved in this study). We will also use fMRI to examine how the neural networks controlling handwriting are modulated by the presence or absence of visual and auditory feedback, and by the congruence between them. This is now possible thanks to the MRI-compatible digital tablet developed by our team, which allows the trace to be displayed online on a screen (Longcamp et al., 2014).

Finally, we will study multisensory integration (proprioceptive, visual and auditory) in Parkinson’s disease, which will allow us to address two questions. First, can sonification act as a “sensory prosthesis” that helps parkinsonian patients with a proprioceptive deficit to feel their movements better? In other words, we plan to evaluate the impact of substituting audition for proprioception in Parkinson’s disease. Second, can sonification improve motor control in Parkinson’s disease? The specific aim is to determine whether real-time auditory cueing (through sonification) allows patients to recruit brain areas not affected by Parkinson’s disease, as already observed in music-based movement therapy.